What is Parallel Computing

Overview

Teaching: 30 min

Exercises: 15 minQuestions

How do we execute a task in parallel?

What benefits arise from parallel execution?

What are the limits of gains from execution in parallel?

What is the difference between implicit and explicit parallelisation.

Objectives

Prepare a job submission script for the parallel executable.

Methods of Parallel Computing

To understand the different types of Parallel Computing we first need to clarify some terms.

CPU: Unit that does the computations.

Task: Like a thread, but multiple tasks do not need to share memory.

Node: A single computer of the cluster. Nodes are made up of CPUs and RAM.

Shared Memory: When multiple CPUs are used within a single task.

Distributed Memory: When multiple tasks are used.

Which methods are available to you is largely dependent on the nature of the problem and software being used.

Shared-Memory (SMP)

Shared-memory multiproccessing divides work among CPUs or threads, all of these threads require access to the same memory.

Often called Multithreading.

This means that all CPUs must be on the same node, most Mahuika nodes have 128 CPUs.

Shared memory parallelism is used in our example script sum_matrix.r.

Number of threads to use is specified by the Slurm option --cpus-per-task.

Distributed-Memory (MPI)

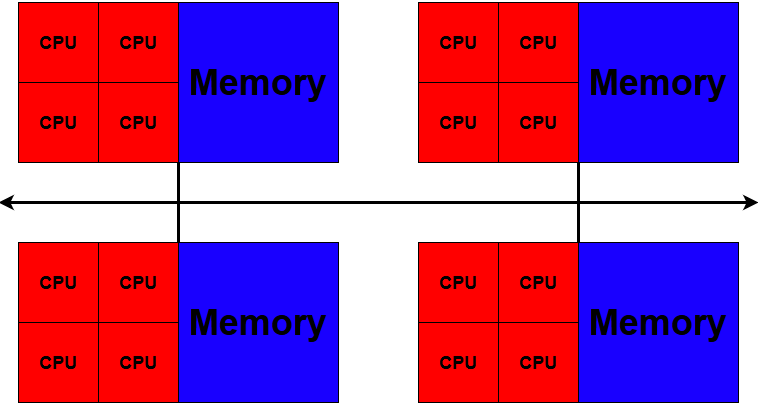

Distributed-memory multiproccessing divides work among tasks, a task may contain multiple CPUs (provided they all share memory, as discussed previously).

Message Passing Interface (MPI) is a communication standard for distributed-memory multiproccessing. While there are other standards, often ‘MPI’ is used synonymously with Distributed parallelism.

Each task has it’s own exclusive memory, tasks can be spread across multiple nodes, communicating via and interconnect. This allows MPI jobs to be much larger than shared memory jobs. It also means that memory requirements are more likely to increase proportionally with CPUs.

Distributed-Memory multiproccessing predates shared-memory multiproccessing, and is more common with classical high performance applications (older computers had one CPU per node).

Number of tasks to use is specified by the Slurm option --ntasks, because the number of tasks ending up on one node is variable you should use --mem-per-cpu rather than --mem (mem per node) to ensure each task has enough.

Using a combination of Shared and Distributed memory is called Hybrid Parallel.

srun

In order to use distrubuted memory parallelism under Slurm, most commands must be prefixed with

srun.For example

srun Rscript sum_matrix.r.We do not need to prefix commands that do not need access to the distrubuted CPUs, ‘echo’, ‘module load’, etc. Some scientific software will have an equivalent built in and may not require

srun, The best place to look for this information is the software user documentation, or the NeSI support docs.

Embarrassingly Parallel

Possible when tasks are independant and no communication between them is required.

e.g. parameter sweeps and Monte Carlo simulations.

Can be thought of less as running a single job in parallel and more about running multiple serial-jobs simultaneously. Often this will involve running the same process on multiple inputs.

Embarrassingly parallel jobs should be able to scale without any loss of efficiency. If this type of parallelisation is an option, it will almost certainly be the best choice.

The best way to run embarrassingly parallel jobs in Slurm is using a Job Array. Job arrays allow one script to be executed many times in a way that is convenient to submit and manage.

A job array can be specified using --array

If you are specifying --output you will probably want to make use of the %a token, so that each output file contains the array ID.

e.g. --output %x_%a.out will create the output <job name>_<array ID>.out.

If you are writing your own code, then this is something you will probably have to specify yourself.

How to Utilise Multiple CPUs

Requesting extra resources through Slurm only means that more resources will be available, it does not guarantee your program will be able to make use of them.

Generally speaking, Parallelism is either implicit where the software figures out everything behind the scenes, or explicit where the software requires extra direction from the user.

Scientific Software

The first step when looking to run particular software should always be to read the documentation. On one end of the scale, some software may claim to make use of multiple cores implicitly, but this should be verified as the methods used to determine available resources are not guaranteed to work.

Some software will require you to specify number of cores (e.g. -n 8 or -np 16), or even type of paralellisation (e.g. -dis or -mpi=intelmpi).

Occasionally your input files may require rewriting/regenerating for every new CPU combintation (e.g. domain based parallelism without automatic partitioning).

Writing Code

Occasionally requesting more CPUs in your Slurm job is all that is required and whatever program you are running will automagically take advantage of the additional resources. However, it’s more likely to require some amount of effort on your behalf.

It is important to determine this before you start requesting more resources through Slurm

If you are writing your own code, some programming languages will have functions that can make use of multiple CPUs without requiring you to changes your code. However, unless that function is where the majority of time is spent, this is unlikely to give you the performance you are looking for.

Python: Multiproccessing (not to be confused with threading which is not really parallel.)

MATLAB: Parpool

Summary

| Name | Other Names | Slurm Option | Pros/cons |

|---|---|---|---|

| Shared Memory Parallelism | Multithreading, Multiproccessing | --cpus-per-task |

does not have to use interconnect/can only use max one node |

| Distrubuted Memory Parallelism | MPI, OpenMPI | --ntasks and add srun before command |

can use any number of nodes/does have to use interconnect |

| Hybrid | --ntasks and --cpus-per-task and add srun before command |

best of both!/can be tricky to set-up and get right | |

| Job Array | --array |

most efficient form of parallelism/not as flexible and requires more manual set-up in Slurm script | |

| General Purpose GPU | --gpus-per-node |

GPUs can accelerate your code A LOT/sometimes GPUs are all busy |

Running a Parallel Job.

Pick one of the method of Paralellism mentioned above, and modify your

example.slscript to use this method.Solution

What does the printout say at the start of your job about number and location of node.

Key Points

Parallel programming allows applications to take advantage of parallel hardware; serial code will not ‘just work.’

There are multiple ways you can run